
Breaking the 80 Gbps barrier!!!

The long wait for real-world CCR1072 performance numbers is almost over: the last two VMware server chassis we needed to push the full 80 Gbps arrived in the StubArea51 lab today. Thanks to everyone who wrote in and commented on our first two reviews of the CCR1072-1G-8S+. We began throughput testing on the CCR1072 in late July of this year, but had to order a lot of parts (10 Gig PCIe cards, SFP+ modules and fiber) before we could push 80 Gbps of traffic through this router.

Challenges

We have had to work through a number of issues on the way to 80 Gbps, but we are very close. They will be detailed in the full review we plan to release next week; here are a few:

- VMware ESXi LACP hashing – We initially built LACP channels between the ESXi hosts and the CCR1072, expecting to load-balance across the links by using multiple source and destination IPs. Instead, traffic kept getting stuck on one side of the LACP channel, so we had to move to independent links.

- CPU resources – TCP consumes an enormous number of CPU cycles, and we were only able to get 27 Gbps per ESXi host (we had two), or 54 Gbps total.

- TCP offload – Most NICs these days can offload TCP segmentation to the NIC hardware instead of the CPU, but we never got this working properly in VMware, so it did nothing to reduce the load on the hypervisor host CPUs.

- MTU – While the CCR1072 supports a maximum MTU of 10,226 bytes, the VMware vSwitch only supports 9000, so we lost some potential throughput by having to lower the MTU by more than 10%.
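To illustrate why spreading traffic across multiple source and destination IPs does not guarantee even use of an LACP bundle, here is a minimal Python sketch of a simplified layer-3 hash. The XOR-of-last-octets function, the `pick_link` and `link_usage` names, and the addresses are all hypothetical; this is not the exact hash that ESXi or RouterOS uses. The key property it shares with real hash policies is that every packet of a given IP pair always maps to the same member link.

```python
from collections import Counter
import ipaddress


def pick_link(src_ip: str, dst_ip: str, num_links: int) -> int:
    """Hypothetical simplified layer-3 hash (not VMware's or RouterOS's):
    XOR the last octet of the source and destination IPv4 addresses,
    then take the result modulo the number of member links."""
    src_last = int(ipaddress.IPv4Address(src_ip)) & 0xFF
    dst_last = int(ipaddress.IPv4Address(dst_ip)) & 0xFF
    return (src_last ^ dst_last) % num_links


def link_usage(flows, num_links: int) -> Counter:
    """Count how many flows the hash places on each member link."""
    return Counter(pick_link(src, dst, num_links) for src, dst in flows)


# Hypothetical test plan: client .N on one subnet talks to server .N on
# another subnet, across a 4-link bundle.
flows = [(f"10.0.0.{n}", f"10.0.1.{n}") for n in range(1, 5)]

if __name__ == "__main__":
    print(dict(link_usage(flows, num_links=4)))
```

With this hypothetical hash, all four client/server pairs land on link 0 and the other three links sit idle, because each pair's last octets are identical and XOR to zero. Pinning each flow to its own independent link sidesteps the hash entirely.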
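The MTU numbers above can be checked with a little arithmetic. This Python sketch computes the MTU reduction, the share of each full-size frame that is TCP payload, and the frame rate needed to fill a 10 Gbps link. The header and framing sizes are assumptions, not measurements from our setup: IPv4 plus TCP with no options (40 bytes), and 38 bytes of Ethernet overhead per frame on the wire.

```python
# Assumptions (not from the original test setup):
#   - IPv4 + TCP headers with no options: 20 + 20 = 40 bytes per packet
#   - Ethernet wire overhead per frame: 14 header + 4 FCS
#     + 8 preamble/SFD + 12 inter-frame gap = 38 bytes
IP_TCP_HEADER_BYTES = 40
ETH_WIRE_OVERHEAD_BYTES = 38


def mtu_reduction(max_mtu: int, used_mtu: int) -> float:
    """Fraction by which the MTU was lowered."""
    return (max_mtu - used_mtu) / max_mtu


def payload_efficiency(mtu: int) -> float:
    """TCP payload bytes divided by bytes on the wire for one full-size frame."""
    return (mtu - IP_TCP_HEADER_BYTES) / (mtu + ETH_WIRE_OVERHEAD_BYTES)


def frames_per_second(link_bps: float, mtu: int) -> float:
    """Full-size frames per second needed to fill a link at line rate."""
    return link_bps / ((mtu + ETH_WIRE_OVERHEAD_BYTES) * 8)


if __name__ == "__main__":
    print(f"MTU reduction: {mtu_reduction(10226, 9000):.1%}")
    for mtu in (9000, 10226):
        print(f"MTU {mtu}: payload efficiency {payload_efficiency(mtu):.2%}, "
              f"{frames_per_second(10e9, mtu):,.0f} frames/s at 10 Gbps")
```

Under these assumptions the MTU drops by about 12%, while payload efficiency per full-size frame only moves from about 99.24% to 99.14%. The bigger difference is the frame rate: roughly 138,000 frames per second per 10 Gbps link at 9000 bytes versus roughly 122,000 at 10,226, which plausibly matters more on hosts that are already CPU-bound.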

Results preview

Here is a snapshot of where we have gotten so far using the RouterOS 6.30.4 bugfix release:

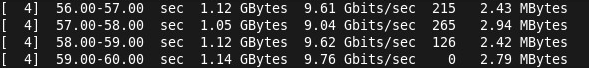

Single TCP stream performance at 10 Gbps in iperf with a 9000-byte MTU (L2/L3)

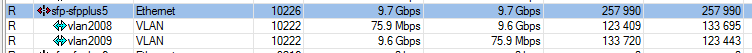

Throughput in WinBox

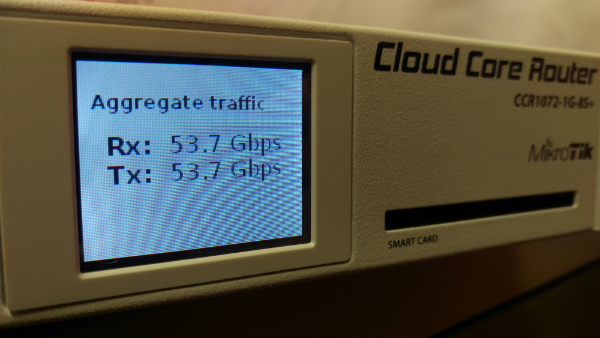

Max throughput so far with two ESXi 6.0 hosts (HP DL360 G6 with two Intel Xeon X5570 CPUs)

Two more servers arrived today – Full results coming very soon!

Once we hit the 54 Gbps limit of our two hosts, we ordered two more servers, and they just arrived. We will be racking them in the lab immediately and should have results by next week. Thanks for being patient, and check back soon!

Nice work. Is anyone using the CCR1072 in a real production environment with 10 Gbps links?

I’m not aware of anyone who has it in production yet. We have several customers evaluating it as a potential core router, but none of them have put it into service with live traffic. That said, if our testing is any indication, these routers will be able to handle insane amounts of traffic.

We at starnet sh.p.k use the CCR1072 as our BGP router.