Overview

This is an article I’ve wanted to write for a long time. Over the last decade, my network design work with WISPs/FISPs using commodity equipment like MikroTik and FiberStore has yielded quite a few best practices and lessons learned.

While the idea of “router on a stick” isn’t new, when I first started working with WISPs/FISPs and MikroTik routers 10+ years ago, I immediately noticed a few common elements in the requests for consulting:

“I’m out of ports on my router…how do I add more?”

“I started with a single router. How do I make it redundant and keep NAT/peering working properly?”

“I have high CPU on my router and I don’t know how to add capacity and split the traffic”

“I can’t afford Cisco or Juniper but I need a network that’s highly available and resilient”

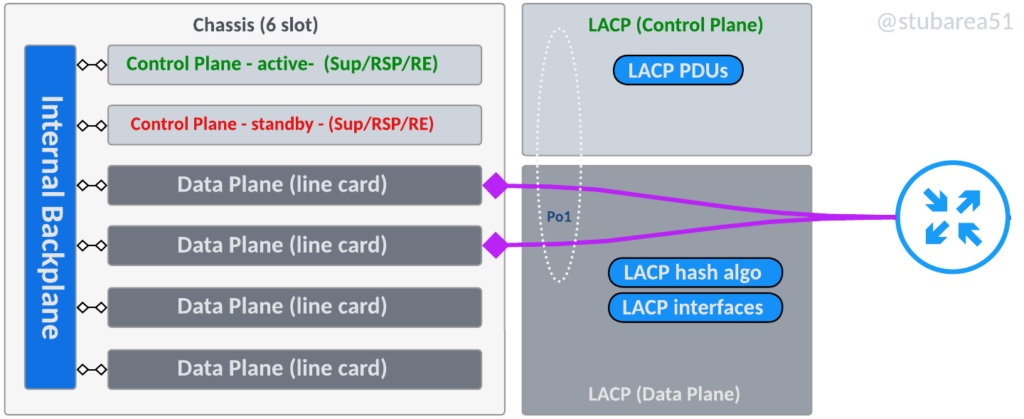

I came from a telco background where large chassis were used pretty much everywhere for redundancy, with links split across multiple line cards using LACP, so that was my first inclination for solving the problem.

Much like today, getting one or more chassis-based routers for redundancy was expensive and often not practical within budgetary or physical/environmental constraints.

So I started experimenting with switch stacks as an inexpensive alternative to chassis-based equipment given that they operate using similar principles.

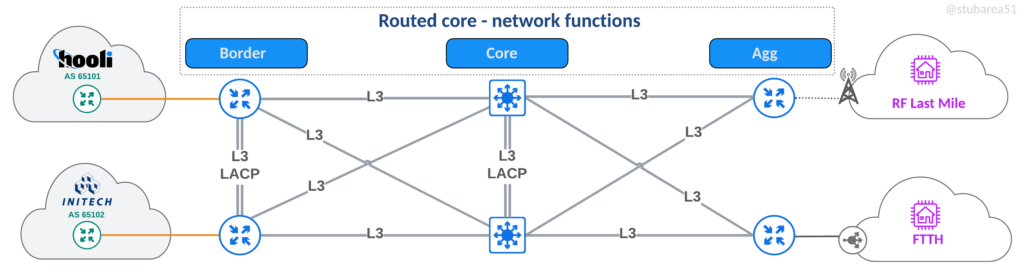

Let’s start with an overview of common Layer 3 network topologies in the WISP/FISP world.

WISP/FISP Topologies

Routed

This is the most traditional design and still widely used in many small ISPs. Physical links plug directly into the router or switch that handles the corresponding network function.

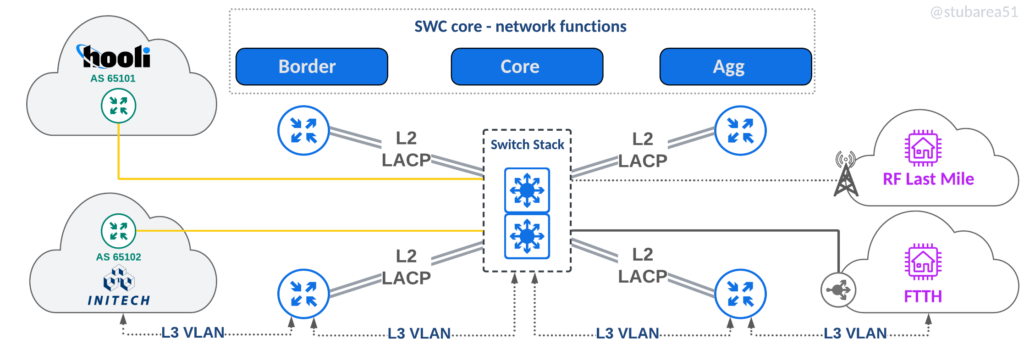

Switch Centric (SWC)

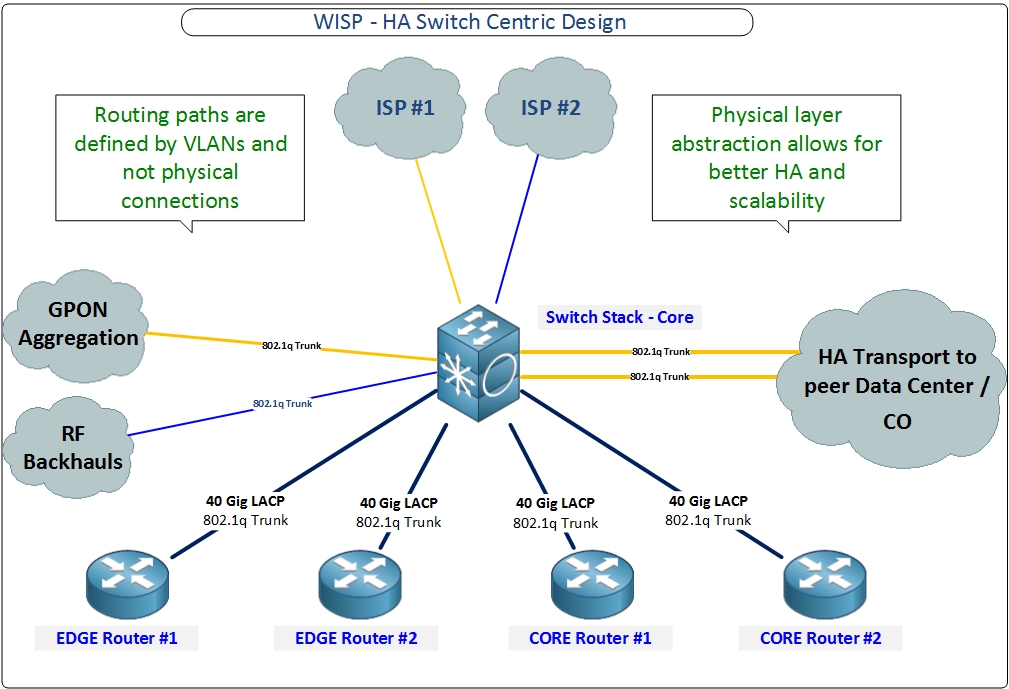

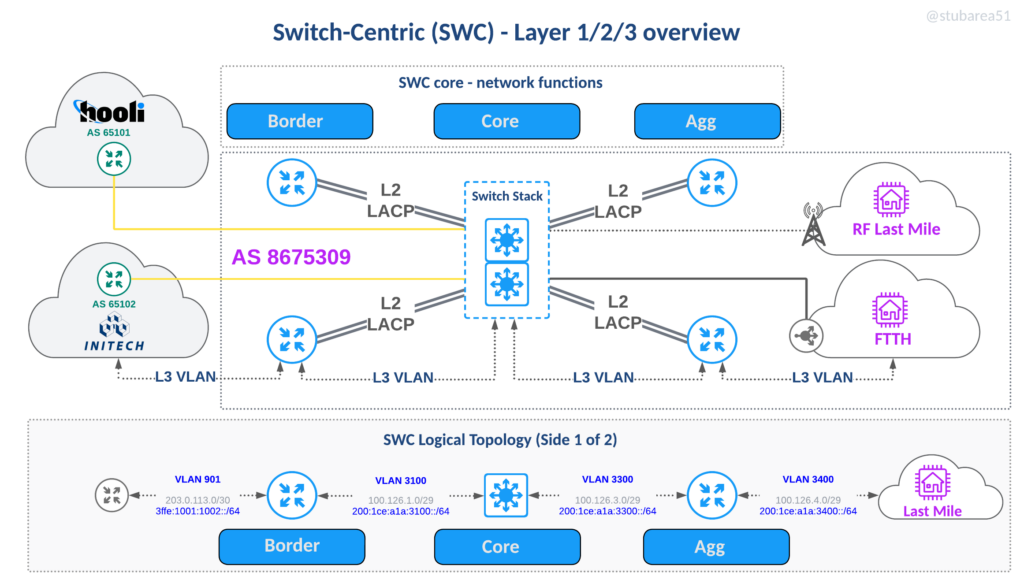

The main focus of this article, the SWC topology uses a switch stack that all links plug into, with L3 paths logically defined by VLANs.

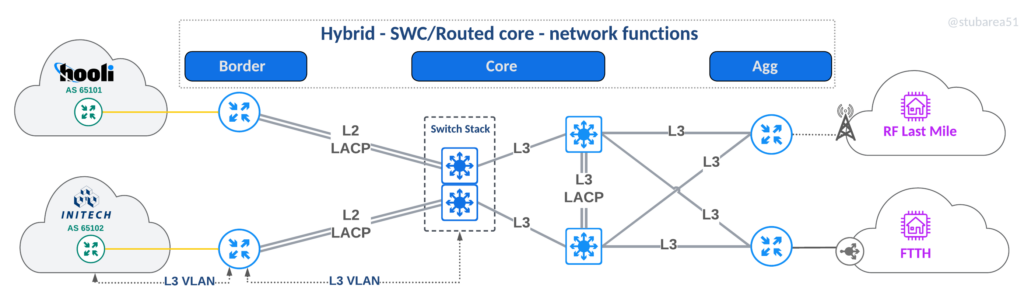

Hybrid – SWC/Routed

An approach used when both routed and SWC make sense – often used for border routers with just a few 100G ports to extend port capacity in a scalable way.

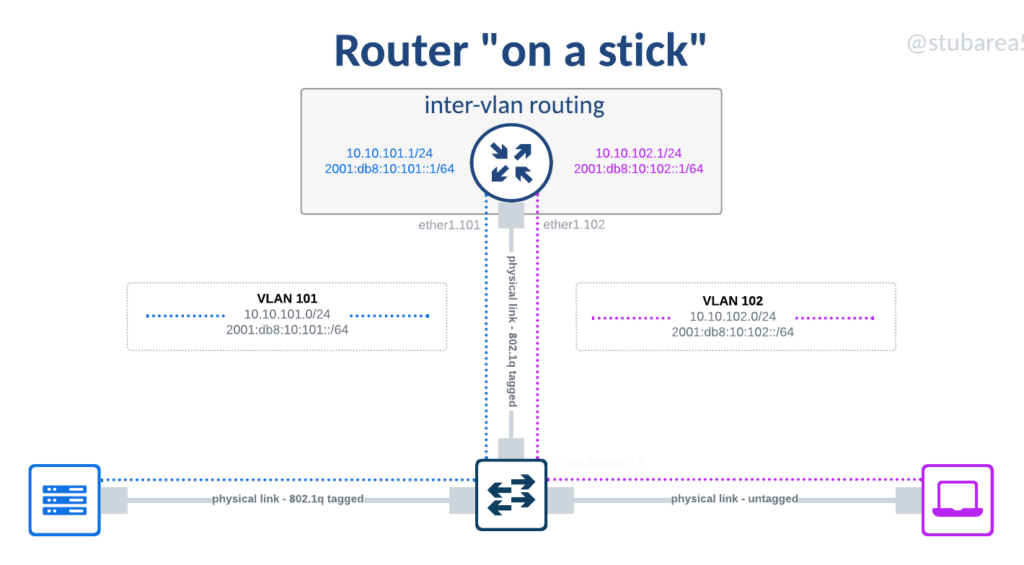

SWC begins with router on a stick

Before diving into the origins and elements of switch centric design (SWC), it’s probably helpful to talk about what “router on a stick” is and how it relates to the design.

The term router on a stick refers to the use of a switch to extend the port density of a router. In the early days of Ethernet networking in the 1990s and 2000s, routers were incredibly expensive and often had only one or two ports.

Even as routers became modular and cards could be added with a few more ports, it was still very expensive to add routed ports. Switches, by contrast, were much cheaper, and most vendors offered models with up to 48 ports.

It became a common practice to use a single routed port, or a pair of them bonded with LACP, and establish an 802.1Q tagged link to a switch so that different subnets/VLANs could be sent to the router for inter-VLAN routing or sent on to a different destination like the Internet.
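The forwarding decision described above can be sketched in a few lines of Python. This is only an illustrative model, not a router config: the VLAN IDs, subnets and the `route` helper are assumptions made up for the example.

```python
from ipaddress import ip_address, ip_network

# Hypothetical VLAN plan: each VLAN ID maps to one subnet on the tagged trunk.
VLAN_SUBNETS = {
    10: ip_network("10.0.10.0/24"),  # e.g. servers
    20: ip_network("10.0.20.0/24"),  # e.g. management
    30: ip_network("10.0.30.0/24"),  # e.g. customers
}
UPLINK = "uplink"  # placeholder for any destination outside the local VLANs


def vlan_for(address):
    """Return the VLAN ID whose subnet contains the address, or None."""
    addr = ip_address(address)
    for vlan_id, subnet in VLAN_SUBNETS.items():
        if addr in subnet:
            return vlan_id
    return None


def route(src, dst):
    """Describe the path a packet takes in a router-on-a-stick layout."""
    src_vlan = vlan_for(src)
    dst_vlan = vlan_for(dst)
    if src_vlan is None:
        raise ValueError(f"{src} is not in any local VLAN")
    if src_vlan == dst_vlan:
        # Same VLAN: the switch forwards the frame, the router is not involved.
        return f"switched locally in VLAN {src_vlan}"
    if dst_vlan is None:
        # Unknown destination: up the trunk to the router, then out the uplink.
        return f"VLAN {src_vlan} -> router -> {UPLINK}"
    # Different VLANs: up the trunk tagged with one VLAN, back down with another.
    return f"VLAN {src_vlan} -> router -> VLAN {dst_vlan}"


print(route("10.0.10.5", "10.0.10.9"))
print(route("10.0.10.5", "10.0.20.7"))
print(route("10.0.10.5", "8.8.8.8"))
```

The inter-VLAN case is the one that crosses the tagged link twice, which is why the router's throughput mattered so much in early deployments.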

One of the drawbacks to this approach was the speed of the router. 20 years ago, outside of larger chassis-based platforms, routers didn’t typically exceed 100 Mbps of throughput and many were much less than that.

As such, the practice of using “router on a stick” began to fall out of favor with network designers for two reasons:

1. The advent of L3 switching – as ASICs became commonplace in the 2000s and leading into the 2010s, routers weren’t needed at every L3 point in the network anymore. This improved performance and reduced cost.

2. Layer 2 problems – 20 years ago, it was harder to mitigate L2 issues like loops and broadcast storms because not all devices had the L2 protections that almost every managed switch now has, like storm control, MSTP, MAC-based loop guards, etc.

Why router on a stick for WISP/FISP networks?

The short answer lies in the use of commodity routers like MikroTik and Ubiquiti for WISP/FISP networks.

Both vendors are popular with WISPs as they bring advanced feature sets like MPLS and BGP into routers that cost only hundreds of dollars. The flagship models even today don’t exceed several thousand dollars.

As small ISPs moved from 1G to 10G in their core networks roughly 10 years ago, a number of routers were released from both vendors with a limited number of 10G ports.

A MikroTik CCR1036-8G-2S+ router that became one of the most popular SWC routers

It quickly became apparent in the WISPs/FISPs that I worked with that more 10G ports were needed to properly connect routers, radios, servers and other systems together.

Although the driver for using router on a stick was port density, I’d later discover a number of other benefits that are still relevant today.

Before getting into the benefits of connecting all devices to the switch, we need to cover switch stacking, the next component of the journey in SWC design.

Adding switch stacking

Router on a stick was only part of the equation as we worked through early iterations of the design – adding high availability was another needed component.

Adding multiple independent switches to routers that had ports tagged down to the switch became messy because L2 loop prevention was needed in order to manage links.

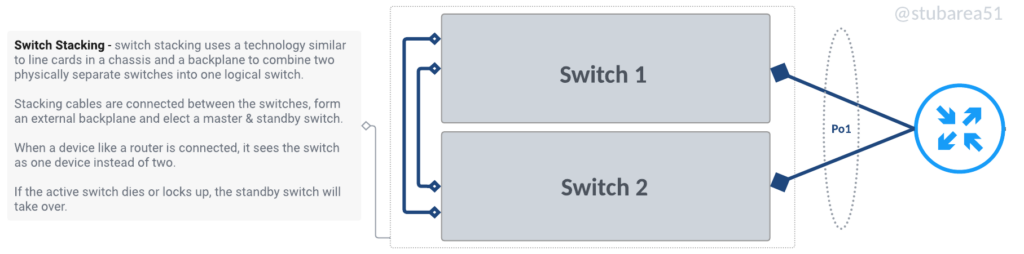

What is a switch stack?

Switch stacking allows multiple switches to be combined into one logical switch using either a backplane or frontplane via dedicated cabling instead of a circuit board like you’d find in a chassis.

FiberStore has a great blog article about it and the difference between stacking and MLAG:

Switch Stacking Explained: Basis, Configuration & FAQs | FS Community

Types of switch stacking

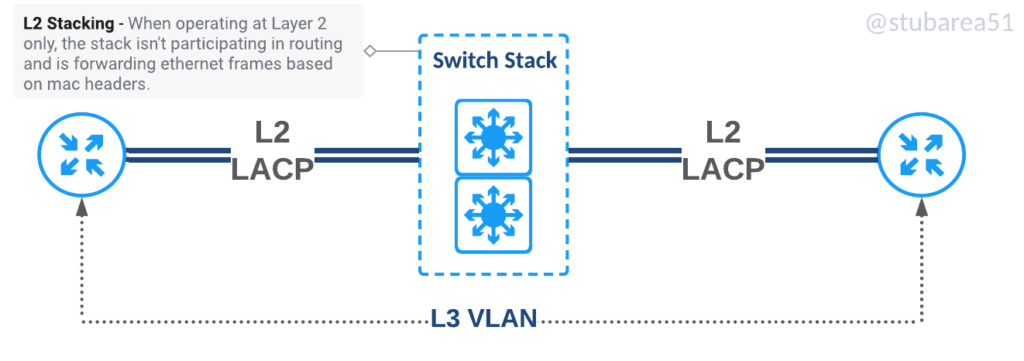

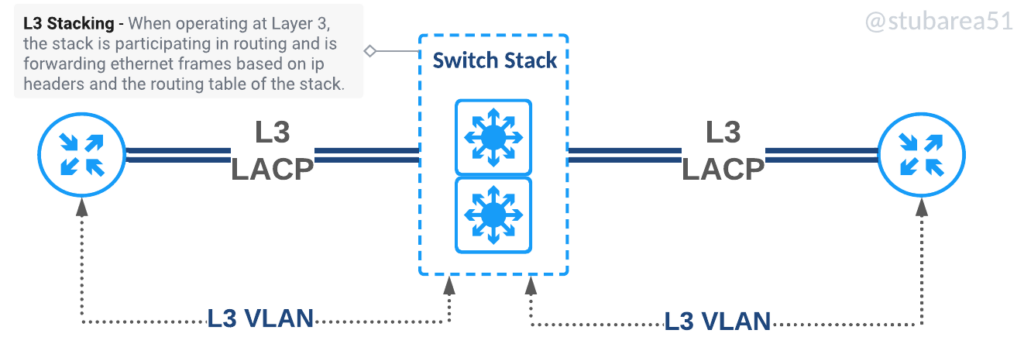

There are two main types of switch stacking: those that stack Layer 2 only and those that stack both Layer 2 and Layer 3. Our earliest designs used only L2 stacking as it was less expensive to build, but as the cost of switches that support both stacking and L3 came down in the mid-2010s, it became easier to use Layer 3 stacking going forward.

Stacking and Backplanes

The idea of backplanes in computing goes back to at least the mid 20th century. It’s an efficient way to tie together data communication into a bus architecture.

In this context, a bus is defined as:

“a group of electrical connectors in parallel with each other, so that each pin of each connector is linked to the same relative pin of all the other connectors” (source: Wikipedia)

Here is a wire-based backplane from a 1960s computer.

Chassis backplane

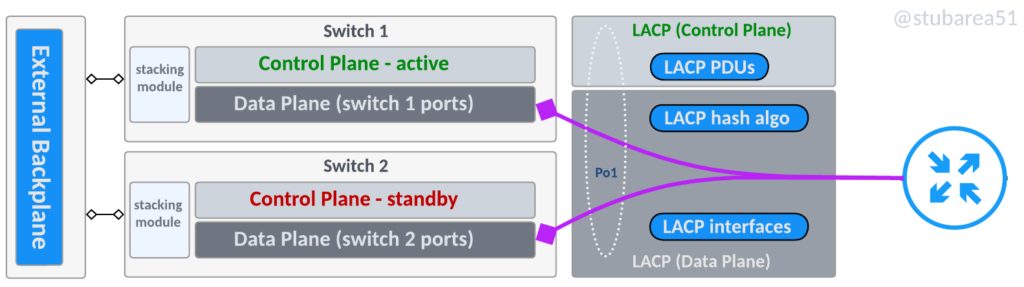

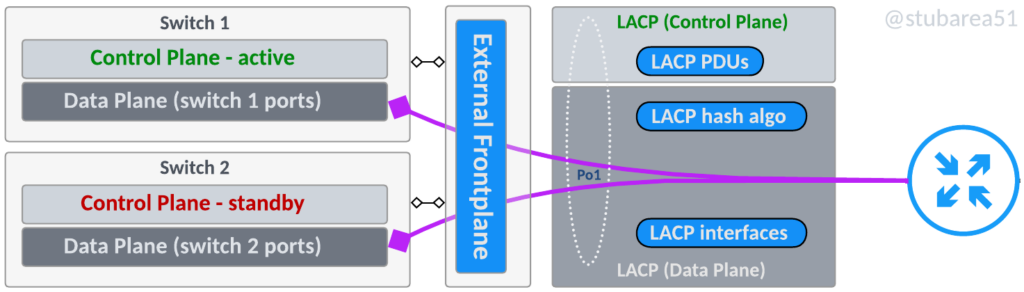

Does a switch stack actually have a “backplane” or is it a ring?

When only two switches are stacked, technically it’s both, but as the number of switches increases, stack connectivity becomes more of a ring than a true bus architecture like a chassis backplane.

In the context of this article, it’s helpful to think about the “backplane” as the communication channel that ties the switches together and carries traffic between switches as needed.

Vendors variously refer to the stack communication as a backplane, a frontplane, a channel, a ring, stacking links, etc.

Even though certain stack configurations don’t exactly fit the definition of a backplane with a bus, it’s a common reference and we’ll illustrate the two main types of switching planes.

Stacking backplane

With two switches, this topology is closest to a chassis backplane and uses dedicated stacking modules with proprietary cabling. Most switch manufacturers have moved away from this design towards a “frontplane”.

Stacking frontplane

A stacking frontplane uses built-in 10G, 25G, 40G or 100G ports to establish the stack communication for the control plane and the data plane.

The ports are configured for stack communication in the CLI, typically when the switches are set up. They cannot then be used or configured for specific IPs or VLANs, as the switch manages all communication internally.

Chassis vs. Stack

If the underlying architecture of a chassis and a stack is so similar, why not just use stacking everywhere?

This is where the business context comes into play. There are several advantages that a chassis has over stacking.

Chassis advantages

- Failover time – recovery from a control plane or line card failure is usually almost immediate and sometimes even lossless. Stacking usually takes longer to recover, typically in the realm of 15 to 30 seconds for a stack switchover

- Upgrades – upgrading software, while certainly a more complex operation in a chassis, is often less impactful, as the control plane modules can be updated one at a time and line cards are often designed to be upgraded while in service without disruption.

- Physical redundancy – chassis-based devices are modular and typically have more options for redundancy like power and fan modules.

- Throughput – when large amounts of throughput are needed, chassis-based devices have more scalability in their internal backplanes, whereas stacking has more limited throughput between switches.

However, chassis are not without drawbacks. Stacking has several advantages that are hard to overlook for WISP/FISP designs.

Stacking advantages

- Cost – stackable switches are typically orders of magnitude cheaper than a chassis with line cards for control plane and data plane, plus modules for power and fans

- Power – stacking is almost always more power efficient than a similarly sized chassis

- Space – stackable switches can consume as little as 2RU as compared to 4RU or more for chassis-based devices

- Environmental – chassis are often intended for a climate-controlled DC, CO or enclosure. WISP/FISP peering points aren’t always inside a DC and may sit at the base of a tower or another last mile location, so equipment with easier-to-manage heating and cooling needs is a significant factor.

- Simplicity – stacking is much simpler than chassis-based operations because there are fewer elements to coordinate and upgrades, while service impacting, are typically much easier to perform.

Why not just get a used chassis off of eBay from Cisco/Juniper/Nokia?

This was a popular option when I first started working in the WISP field but has fallen out of favor for a few reasons:

- No hardware support

- No software support

- Security concerns with counterfeit and compromised equipment

- Cost rising due to equipment shortages

- Power draw

History of switch centric in WISP/FISP networking

2014 (Origin of the term)

The term switch-centric or SWC goes back to 2014 when we began to use switch stacks to overcome limited 10G ports in MikroTik routers like the CCR1036-8G-2S+.

I coined and started using the term “switch centric” in consulting because it was easier (and slightly catchier) to explain to entrepreneurs and investors.

2015 (Use of Dell 8024F as a switching core)

The Dell 8024F was one of the first switches we used for stacking. It was inexpensive, readily available and software updates were free.

2016 (Technical drawing for WISPAPALOOZA)

In this version of the design, there are some notable differences with the current design. The first is the lack of L3 switching in the core switch stack. In 2016 and prior, there were fewer switch stacks that supported routing and were available at a price point similar to that of MikroTik routers.

2017 to 2022 (Dell 4048-ON core and move to L3 switching)

The 4048 series was a step up from the 8024F as it has far better L3 capabilities and more ports. It was inexpensive and readily available until 2020, when the chip shortage started to affect supply.

2020 to now (FS 5860 series)

FiberStore took a while to make a solid L3 stackable switch and we tried several models before the 5860 series with mixed results. However, the 5860 series has really become a staple in SWC design. It’s reliable and feature-rich. It’s probably the best value in the market for a stackable switch.

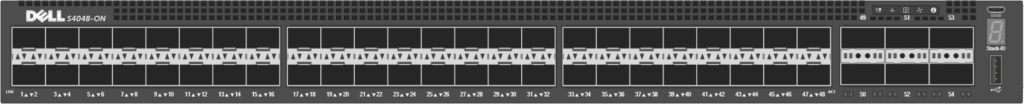

Depending on the port density and speeds required, I currently use both models in SWC design.

FS5860-20SQ

20 x 10G, 4 x 25G, 2 x 40G

FS5860-48SC

48 x 10G, 8 x 100G

Switch Centric Design

The SWC design used today is the result of a decade of development and testing in production at hundreds of WISPs/FISPs to fine-tune and improve the design.

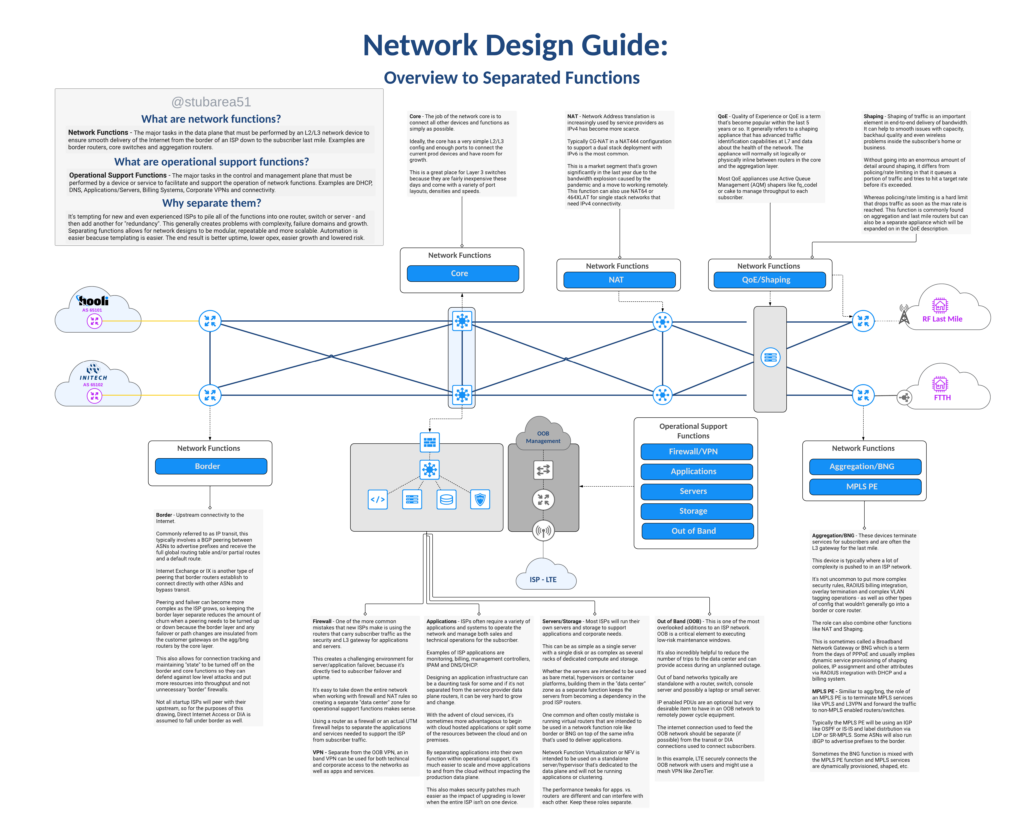

One of the design elements that grew with the SWC design is the idea of separation of network functions. It’s a network design philosophy that embraces the idea of modularity and simpler config by not using one or two devices to do everything.

I previously covered separation of network functions in a blog post if you want to go deeper into the topic.

In reviewing the latest iteration of the SWC design, you’ll see the functions outlined and how they fit into the network.

The logical topology in the drawing illustrates how VLANs are used to define routed paths just like the router on a stick diagram from earlier in the article.

For simplicity, the logical drawing only shows one side of the redundant design. This includes the first border router, the switch stack and the first agg router.

Benefits of SWC:

There are many benefits of SWC design and in my opinion, they far outweigh the tradeoffs for many WISPs/FISPs looking for economical hardware and flexible operations without sacrificing high-availability.

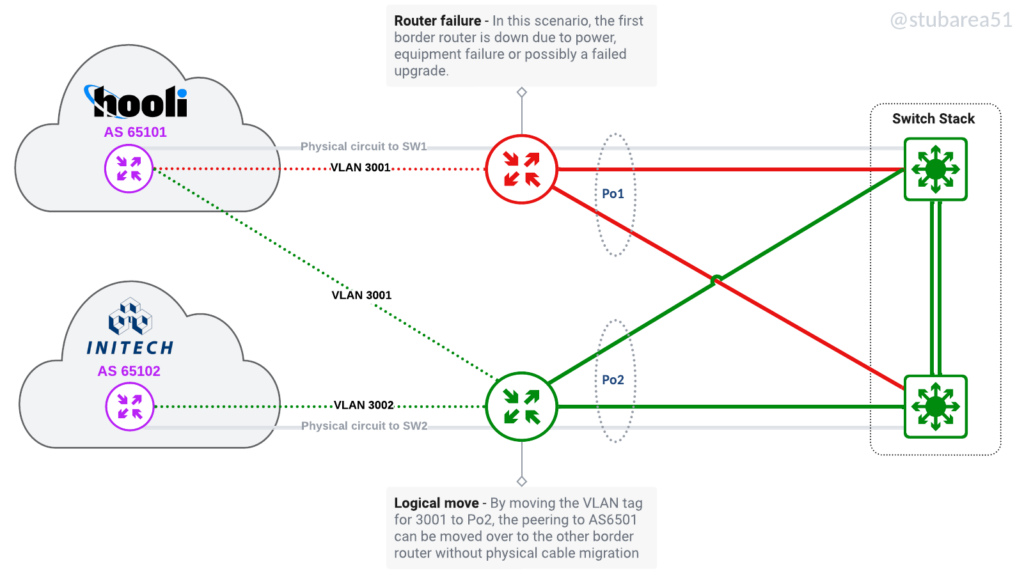

Logically move circuits.

Many WISPs/FISPs serve rural areas and backhaul their traffic to a data center that may be several hours away. The ability to move traffic off of a failed router and onto another remotely is a key advantage of the SWC design.

By moving the PTP VLAN for the transit or IX peering onto another router, the traffic can be restored in a matter of minutes vs. hours and doesn’t involve a physical trip to the DC.
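As a rough illustration of why this is a logical rather than physical change, the sketch below models routers as holders of VLAN interfaces on a shared switch stack. The `Router` class, router names and VLAN IDs are hypothetical, and a real move also involves the L3 addressing and peering config that sit on top of the VLAN.

```python
from dataclasses import dataclass, field


@dataclass
class Router:
    """A router hanging off the switch stack via a tagged LACP channel."""
    name: str
    healthy: bool = True
    vlans: set = field(default_factory=set)


def move_vlan(vlan_id, source, target):
    """Move a VLAN's L3 interface from one router to another.

    No cabling changes: both routers already reach the same switch stack,
    so only the VLAN-to-router assignment is updated.
    """
    if vlan_id not in source.vlans:
        raise ValueError(f"VLAN {vlan_id} is not on {source.name}")
    if not target.healthy:
        raise ValueError(f"{target.name} is not healthy")
    source.vlans.remove(vlan_id)
    target.vlans.add(vlan_id)


# Assumed example: VLAN 100 carries the PTP link to a transit provider.
border1 = Router("border-1", vlans={100, 101})
border2 = Router("border-2", vlans={200})

border1.healthy = False          # border-1 fails
move_vlan(100, border1, border2)  # transit VLAN is re-homed remotely

print(sorted(border1.vlans))
print(sorted(border2.vlans))
```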

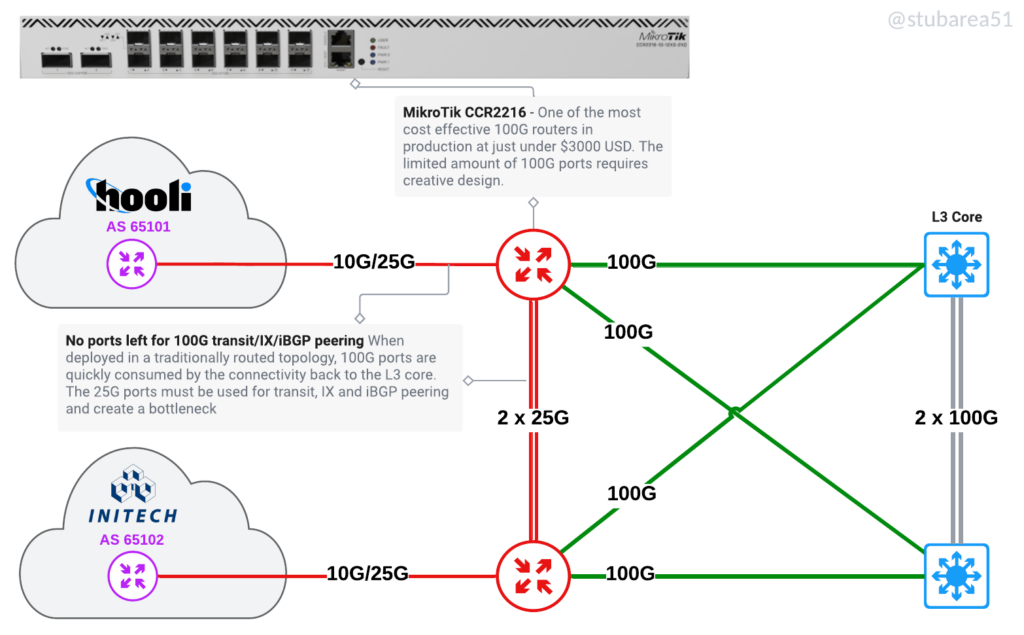

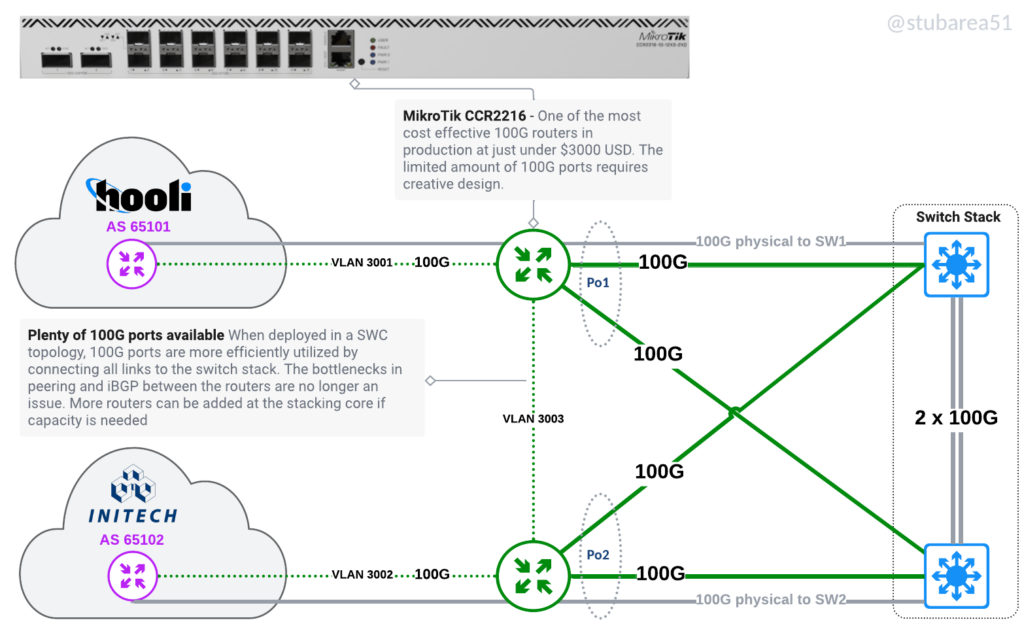

Better utilize routers with low density of 10G/25G/100G ports

One of the original drivers for the SWC design was adding port density for 10G by using the switch stack as a breakout. Now that inexpensive 100G routers are available, we have the same challenge with 100G, as most of the more cost-effective routers have very few 100G ports.

These are two of the routers I most commonly use when cost-effective 100G is required for peering or aggregation:

MikroTik CCR2216-1G-12XS-2XQ (2 x 100G)

Juniper MX204 (4 x 100G)

The limitations of having a low 100G port count in a traditionally routed design.

The same routers in a SWC design

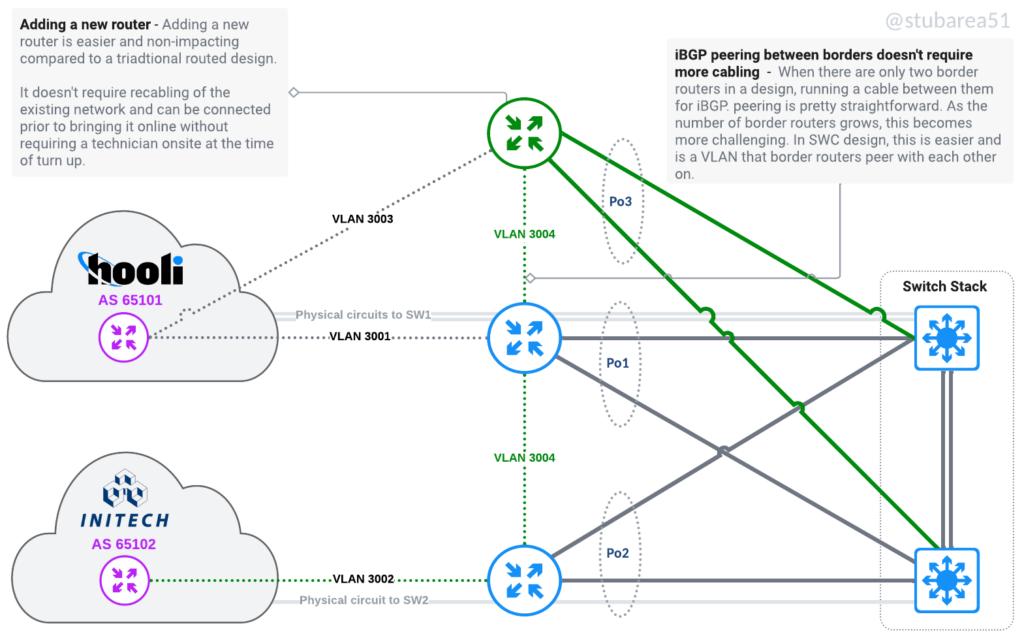

Adding or upgrading routers

Adding a new router or replacing a router with a bigger one becomes simpler. It doesn’t impact production at all because you can set up an LACP channel from the new router to the switch and then configure it remotely when you’re ready. There’s no need to keep moving physical connections around as each layer grows.

If a router is being replaced, config migration is also easier – because everything is tied to VLANs and the VLANs are tied to an LACP channel. Migrating a config with thousands of lines only requires 2 to 3 lines of changes – the physical ports that are LACP members.
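To make the "2 to 3 lines of changes" point concrete, here is a small Python sketch that rewrites only the LAG member line of a config. The config syntax shown (`lag <name> members=...`) is invented for the example and is not any vendor's CLI; the port names and addresses are placeholders too.

```python
def migrate_lag_members(config_text, lag_name, new_members):
    """Rewrite only the member-port line of the named LAG.

    Everything tied to the LAG (VLANs, addresses, routing) is left untouched.
    Returns the new config text and the number of lines changed.
    """
    prefix = f"lag {lag_name} members="
    out = []
    changed = 0
    for line in config_text.splitlines():
        if line.startswith(prefix):
            line = prefix + ",".join(new_members)
            changed += 1
        out.append(line)
    return "\n".join(out), changed


# Hypothetical config from the old router (illustrative syntax only).
old_config = "\n".join([
    "lag core members=sfp1,sfp2",
    "vlan 100 on core address=192.0.2.1/30",
    "vlan 200 on core address=198.51.100.1/30",
])

# The replacement router uses different physical ports for the same LAG.
new_config, changed = migrate_lag_members(old_config, "core", ["qsfp1", "qsfp2"])
print(new_config)
print(f"lines changed: {changed}")
```

Because the VLAN lines reference the LAG by name rather than a physical port, they carry over unchanged no matter how many of them there are.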

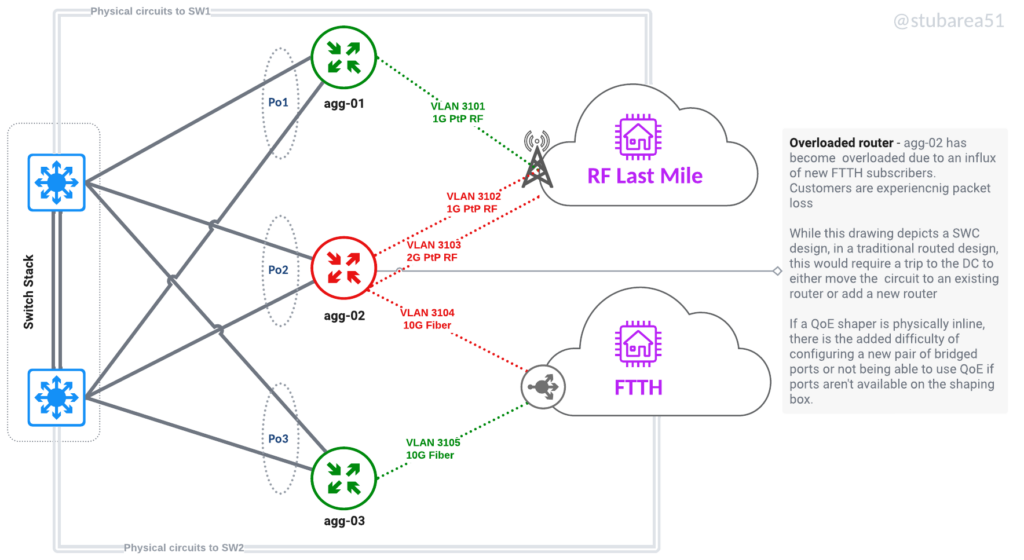

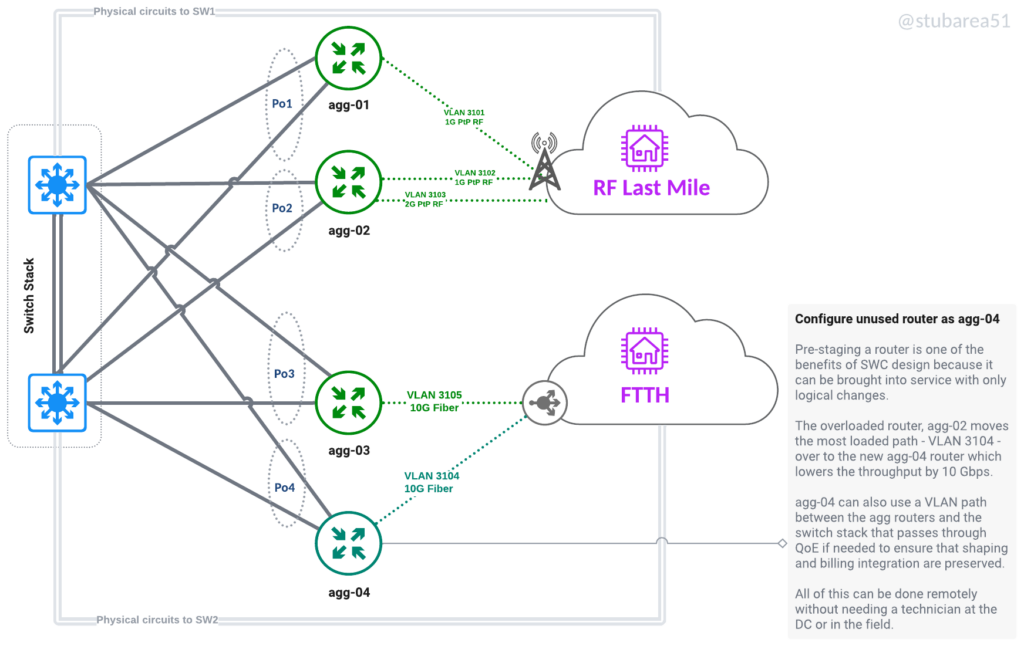

Move workloads logically to efficiently use capacity

Move workloads between routers without recabling or even going onsite. Keep a spare router online and ready to replace a failed one. Move a router that is maxed out to a different role, like from border to VPN aggregator. Moving last mile connectivity logically also allows aggregation capacity to be spread more efficiently across routers.

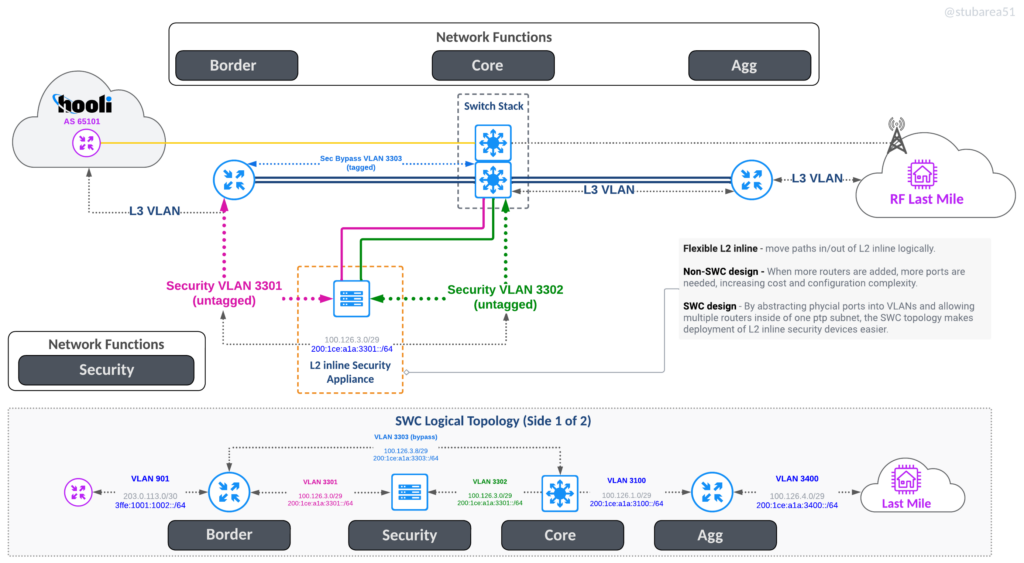

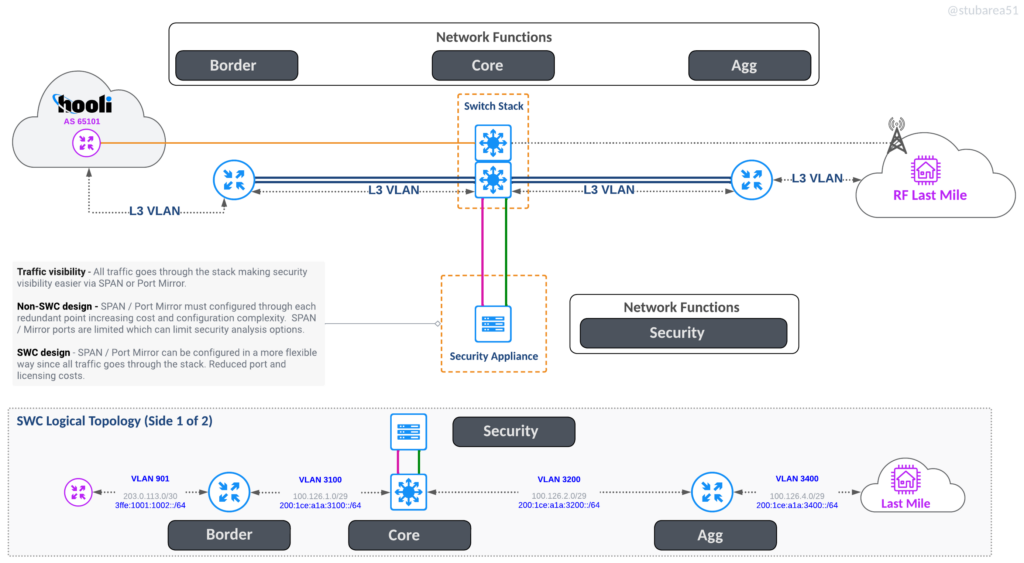

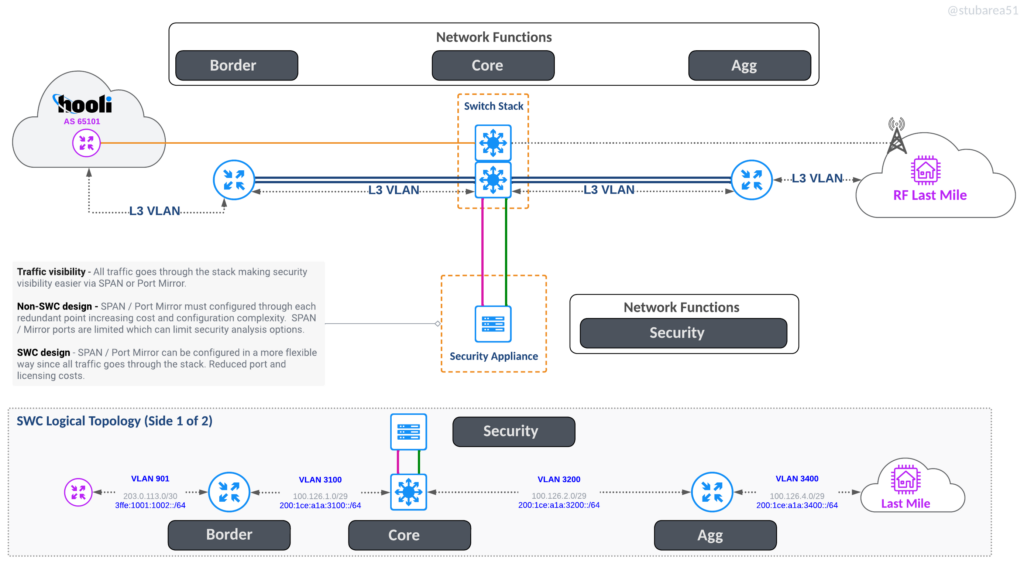

Security Appliances

Inserting security appliances and visibility tools in the traffic path is much easier because all traffic flows through the stack. This also allows the switch to drop attacks in hardware before they hit the border router if desired.

L2 inline appliance

One Arm Off appliance

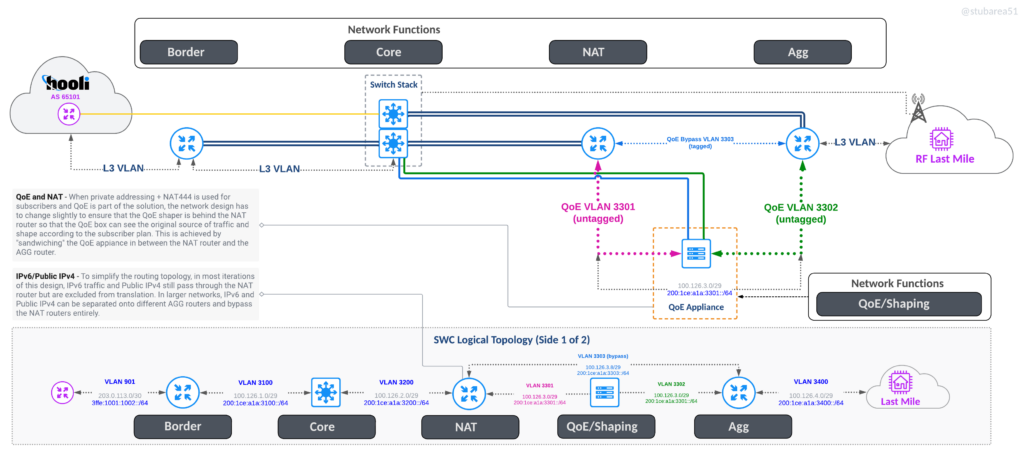

QoE

Inserting QoE is easier because you don’t have to worry about how many ports you need – just use 2 x 10G, 2 x 40G or 2 x 100G and hang it off the core stack with offset VLANs to force routing through it.
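One way to picture the offset VLAN idea is as a reachability check: if the border and aggregation routers share no VLAN except through the appliance, traffic has to cross it. The Python sketch below models that with a breadth-first search; the device names and VLAN IDs (100 and 1100) are assumptions chosen for illustration.

```python
from collections import deque

# Hypothetical VLAN membership on the switch stack. The two VLAN IDs differ
# ("offset"), so the routers only meet through the inline QoE appliance.
VLAN_MEMBERS = {
    100: {"border-1", "qoe"},   # border side of the appliance
    1100: {"qoe", "agg-1"},     # aggregation side of the appliance
}


def l2_path(src, dst, vlan_members):
    """Breadth-first search over devices that share a VLAN.

    Returns the list of devices on the shortest path, or None if the
    destination cannot be reached.
    """
    queue = deque([[src]])
    seen = {src}
    while queue:
        path = queue.popleft()
        if path[-1] == dst:
            return path
        for members in vlan_members.values():
            if path[-1] in members:
                for nxt in sorted(members - seen):
                    seen.add(nxt)
                    queue.append(path + [nxt])
    return None


print(l2_path("border-1", "agg-1", VLAN_MEMBERS))
```

If both routers were placed in the same VLAN instead, the search would return a direct path and the appliance would be bypassed, which is exactly what the offset avoids.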

QoE without NAT

QoE with NAT

Other benefits

Simplified cabling – everything goes to the core switches in pairs except for single links like a transit circuit.

Add link capacity without disruption or the need to configure more routing – you can go from a 2 x 10G to a 4 x 10G LAG in a few minutes without having to add more PTP subnets, routing config and/or ECMP.

OPEX Value – startups and small ISPs derive a lot of value from being able to utilize a design that minimizes the “truck rolls” required to modify, extend and operate the network. The more changes that can be made logically instead of physically, the more value the ISP is getting in OPEX savings.

Incremental HA – Defer the cost of a fully “HA” design by starting with (1) border router, (1) core switch and (1) agg router and then adding the redundant routers at a later time with minimal cabling challenges or disruption. Because the HA design is already planned for, it’s a pretty easy addition.

Unused / cold standby routers can be used for on-demand tasks like serving as a mirror port receiver for pcap analysis.

Why use a chassis or a stack at all – what about using small 1U/2U boxes in a routed design along with MPLS?

This is a good question and if you go back to the network topologies section, you’ll see that listed as one of the topologies because it’s a valid option.

SWC depends on abstraction of the physical layer, which can also be accomplished using MPLS-capable routers. Some of the more advanced MPLS overlay/HA technologies like EVPN multihoming are only available on much more expensive equipment, although whitebox is starting to change that.

When selecting a design for a WISP/FISP, it really depends on a lot of different factors that are technical, financial and even geographic.

Consider these scenarios:

Urban FISP

A more urban FISP that operates in a smaller geographic area may not see much difference in cost between adding cabling to a router and adding a VLAN in a SWC design. Going further, if they don’t use QoE or NAT and are selling only 1G residential Internet access, a purely routed design with MPLS could make sense.

Rural WISP

However, a rural WISP may have 100 times (or more) the geographic area to cover and the cost of sending a technician to a site to add cabling vs. adding a VLAN remotely could end up significantly raising OPEX when it’s done regularly. Add to that a need for QoE and the ability to add capacity remotely if the data center is several hours away – a common scenario in rural ISPs.

Remote WISP

In the most extreme example, some of the WISP/FISPs I work for operate in extremely remote locations like Alaska and may require a helicopter flight to add a cable or other physical move. The ability to make logical changes can be the difference between Internet or no Internet for weeks in a location like Alaska.

Where things get murkier is the cost of 100G ports for peering. SWC design saves quite a bit of money by using peering routers with low 100G port density, but making the rest of the network SWC is challenging because very few 100G-capable stacks exist.

This is why I came up with a “Hybrid SWC Design” where the peering edge is SWC and the rest of the network is routed with MPLS. It’s the best of both worlds and allows MPLS to provide the abstractions I’d otherwise use SWC for.

SWC Tradeoffs:

There are always tradeoffs when making design choices, and SWC has tradeoffs and limitations like any other network design.

– single control plane (vs dual control plane with MLAG)

– upgrade downtime

– traffic passes twice through the switching backplane (not as big of an issue in modern switches)

– harder to find stacking for 100G and beyond

– harder to find advanced features like MPLS if needed

For context, most of these tradeoffs are used as a reason to avoid stacking in large enterprise or carrier environments. But as with all things in network engineering, it depends.

What’s frequently overlooked in using stacking for WISPs/FISPs is the business value of a switch centric design compared to the network engineering trade-offs. Network engineers are quick to jump into the limitations of stacking but forget to consider the comparative value of the benefits to OPEX.

Where to put your money?

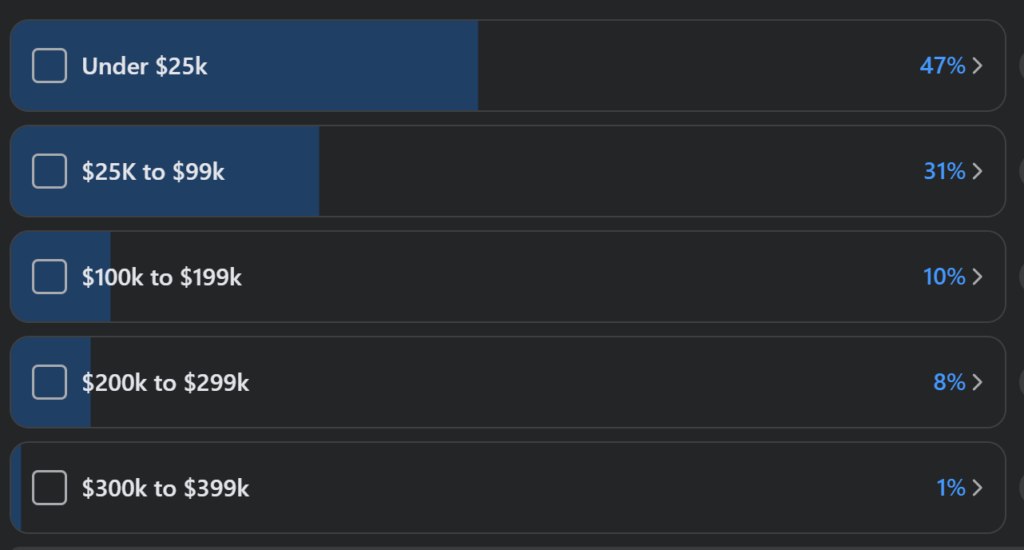

I put out a survey on the Facebook group WISP Talk in April 2023 to get an idea of how much WISPs/FISPs spent in total in year 1, including CAPEX and OPEX. While not perfect or likely scientifically accurate, it’s still pretty enlightening.

48 ISPs responded and the results are listed below:

78% spent under $100k in year 1. That means equipment, services, tower leases, duct tape, etc. all have to be accounted for.

It’s easy as a network geek to sit and argue for the merits of specific protocols, equipment and design as the “right way” to do something in network engineering.

It’s much harder to determine if that path is the “right way” at the right time for the business.

In the WISP/FISP context this article focuses on, getting a new chassis with support is very expensive. Even getting a new 1RU device from a mainstream vendor is expensive.

Fiber is becoming a part of most WISP deployments and is considered much more of an asset than what brand of network gear is being used.

It’s much easier, financially speaking, to buy different network gear in year 2 or 3 than to run new fiber over any distance, so minimizing the cost of the network equipment is often a primary driver for new WISPs/FISPs in year 1.

This is where SWC design really shines – not only can it meet the budget target, it’s not going to require a drastic redesign in 12 months.

The most precious commodity startups have is often not money but time. Being able to avoid trips to the DC or remote sites is invaluable in small start-ups with one or two people. Even as the team grows, avoiding unnecessary trips can accumulate into significant cost savings over a year.

Assuming a $100K budget, if the network core and first tower/fiber cabinet can be kept to 10% or less of the total budget, that’s a win.

SWC Bill of Materials

$10,000 is unlikely to get a single router or switch from major vendors to say nothing of building out an entire DC and service location.

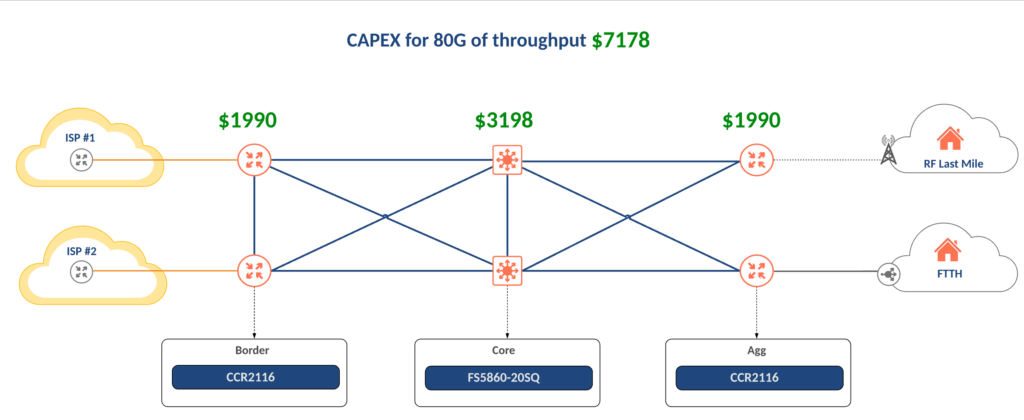

The core design below uses 4 MikroTik CCR2116 routers and 2 FiberStore FS5860-20SQ switches in a stack for a grand total of $7,178 at list price.
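A quick back-of-the-envelope check of that total against the 10% target mentioned earlier, using only the figures quoted in this article. Note the $7,178 covers the core only, so whatever is left over is what remains for the first tower or fiber cabinet under that target.

```python
BUDGET = 100_000       # assumed year 1 budget from the survey discussion
TARGET_SHARE = 0.10    # goal: core + first tower/cabinet at 10% or less
CORE_BOM = 7_178       # list price total for 4 routers + 2 stacked switches

target_spend = round(BUDGET * TARGET_SHARE)
core_share = CORE_BOM / BUDGET
remaining = target_spend - CORE_BOM

print(f"Target spend:         ${target_spend:,}")
print(f"Core share of budget: {core_share:.1%}")
print(f"Left under target:    ${remaining:,}")
```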

If the budget can’t support redundant devices for every function, there is also the option to just build the “A” side of the network with 1 border, 1 core switch and 1 aggregation router. The “B” side can be added later when budget permits.

Conclusions

SWC is a great choice for many WISPs/FISPs and I’ve deployed hundreds of them in the last decade. It’s a mature, stable and scalable solution.

While it’s not the right choice for every network, it’s a solid foundation to consider and evaluate the tradeoffs as compared to other network topologies.

We have recently started building like this, but are in the middle of going one step further and virtualizing all our routers with XCP-NG and MikroTik CHR. Our hardware is refurbished Supermicro BigTwins with a new warranty applied, giving us a chassis with 4 hardware nodes and first- and second-gen EPYC processors for about 3000-4000 euro, where each node provides 46 cores, 96 threads and 256 GB of RAM. We then connect each node with dual 25G NICs to MikroTik 25G switches, with 100G uplinks to a core 100G MikroTik switch.

This design allows us to build a full site with redundancy, with everything virtual except the physical cables into the SWC switch layer. Everything else happens between virtual routers with virtual resources, allowing on-the-fly resource allocation, and the L2 is VXLAN private tunneling in the XCP-NG SDN controller.

The future is bright for all of us smaller ISPs, with hardware that is one or two generations old under refurb warranty for router virtualization, and players like MikroTik bringing L2 100GbE to us smaller players at very good prices!

Great write up and thanks for reinforcing my decision on our design as the right one.