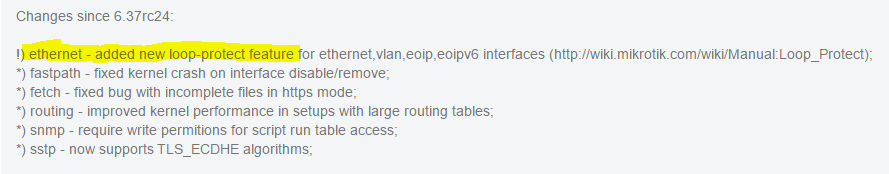

‘Loop Protect’ – New feature in 6.37rc24

Long-time MikroTik users have wanted better loop prevention mechanisms for quite a while. Until the fall of 2016, Rapid STP within bridges was the only feature available. MikroTik has now released Rapid Spanning Tree in hardware for switched ports, as well as a new Loop Protect feature that seems to serve the same function as Cisco’s Loop Guard but does not rely on spanning tree to detect the loop. MikroTik’s version compares the source MAC of the loop protect frame with the MAC of the interface it is received on. If they match, it disables the port until the disable timer expires and then checks again for the existence of a loop.
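The MAC comparison described above can be illustrated with a few lines of Python. This is only a sketch of the idea, not MikroTik’s implementation: the function names `normalize_mac` and `is_own_loop_protect_frame` are hypothetical, and the MAC addresses in the usage lines are made-up examples.

```python
def normalize_mac(mac: str) -> str:
    """Lower-case a MAC address and strip common separators (hypothetical helper)."""
    return mac.lower().replace(":", "").replace("-", "").replace(".", "")


def is_own_loop_protect_frame(frame_src_mac: str, interface_mac: str) -> bool:
    """Return True when a received loop protect frame carries the receiving
    interface's own MAC as its source, i.e. the frame has looped back.

    Assumption: this models only the comparison described in the text,
    not the frame format or anything else RouterOS does internally.
    """
    return normalize_mac(frame_src_mac) == normalize_mac(interface_mac)


# Made-up example addresses: the first frame came back to its sender, the second did not.
looped = is_own_loop_protect_frame("4C:5E:0C:00:00:01", "4c-5e-0c-00-00-01")
foreign = is_own_loop_protect_frame("4C:5E:0C:00:00:02", "4C:5E:0C:00:00:01")
```

In this sketch a match would be the trigger for disabling the port; a frame with any other source MAC is simply not treated as evidence of a loop.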

This feature was introduced in 6.37rc24 on August 31st, 2016.

http://wiki.mikrotik.com/wiki/Manual:Loop_Protect

Use cases for ‘Loop Protect’

Loop protect seems to be designed more as an edge port protocol since it physically disables the port upon detection of a loop, whereas STP will leave the port physically active but logically block traffic on that path. Some potential use cases for enabling this feature could include:

- Edge port on a MikroTik device facing the end subscriber equipment – this would cut down on loops (and outages) that feed back into the ISP because of subscribers plugging in “dumb” switches, hubs or bridged routers.

- Edge port for an Enterprise or SMB user device to prevent loops causing a larger outage from unauthorized switches/hubs that have been plugged in on the edge port.

- Data Center edge port for servers, routers or other devices that shouldn’t create a loop but still have the capacity to do so. An example would be a mis-configured vSwitch in a hypervisor.

- Downstream switch connected to a router or switch whose physical topology does not allow a loop in normal operation. A cable plugged into two ports on the same switch, or on a downstream switch, could still send a broadcast storm towards the port.

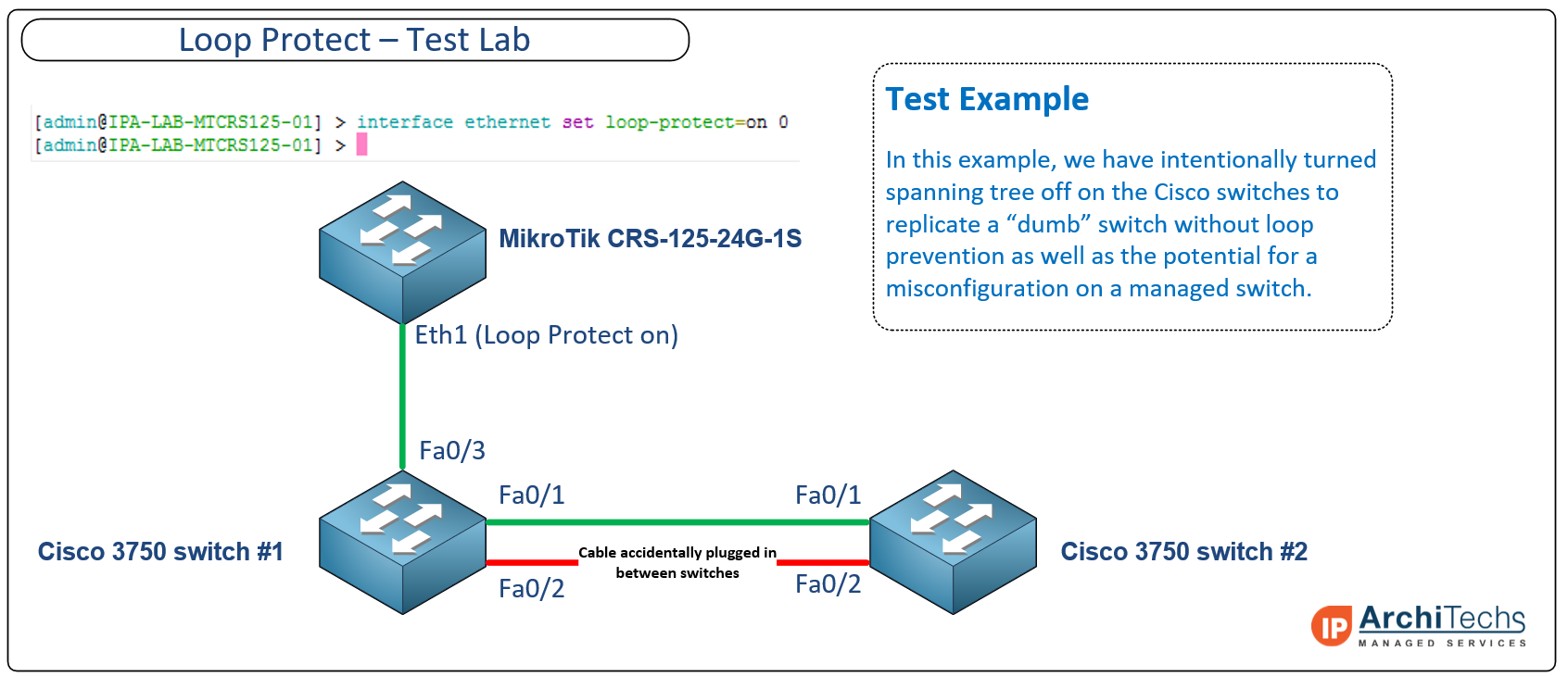

‘Loop Protect’ in the StubArea51 test lab

Below is an example lab we built to test the Loop Protect feature. The idea was to intentionally create a loop between two Cisco 3750 switches that would propagate looped frames and broadcasts towards the Ethernet port on a MikroTik CRS125 with Loop Protect enabled.

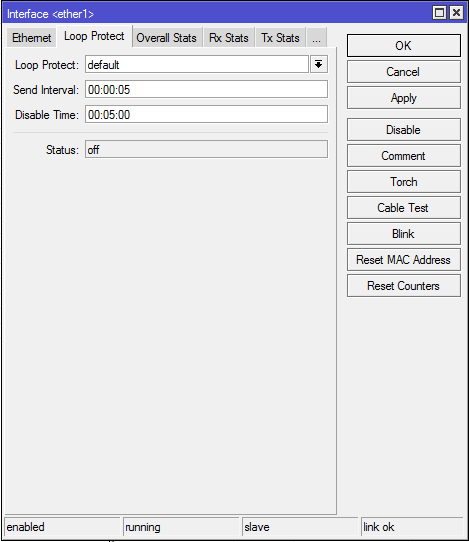

Enabling the ‘Loop Protect’ feature in WinBox

By default, the feature is disabled. To enable it, select the interface, navigate to the ‘Loop Protect’ tab, and set the first drop-down menu to on (some versions of 6.37 have a bug that shows more than the three available settings of default, on and off). You can also adjust the ‘Send Interval’, which controls how frequently Loop Protect frames are sent out of the interface. The ‘Disable Time’ value starts counting down as soon as a loop is detected. When the timer expires, the interface is brought back online and checks again for the existence of a loop. The interface will keep cycling between the disabled state and a new check for as long as the loop is present.
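The disable-and-recheck cycle described above can be sketched as a small state machine in Python. This is a simplified model under stated assumptions, not RouterOS behaviour in detail: the class `LoopProtectPort` and its fields are hypothetical, time is counted in whole seconds, and detection is treated as instantaneous (the ‘Send Interval’ is left out of the model).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LoopProtectPort:
    """Hypothetical model of a port with Loop Protect turned on."""
    disable_time: int                     # seconds the port stays down after a detection
    disabled_until: Optional[int] = None  # time at which the disable timer expires

    def tick(self, now: int, loop_present: bool) -> str:
        """Return the port state ("up" or "disabled") at time `now`."""
        # While the disable timer is running, the port stays down
        # whether or not the loop is still there.
        if self.disabled_until is not None and now < self.disabled_until:
            return "disabled"
        # Timer expired (or never started): the port comes back and re-checks.
        self.disabled_until = None
        if loop_present:
            # Assumption: detection is instant, so the timer restarts immediately.
            self.disabled_until = now + self.disable_time
            return "disabled"
        return "up"


port = LoopProtectPort(disable_time=300)
states = [
    port.tick(0, loop_present=True),     # loop detected, timer starts
    port.tick(299, loop_present=False),  # timer still running
    port.tick(300, loop_present=True),   # re-check finds the loop, timer restarts
    port.tick(600, loop_present=False),  # re-check finds no loop, port stays up
]
```

The point of the sketch is that clearing the loop does not bring the port back by itself: in this model the port only returns to service at the next timer expiry after the loop has gone.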

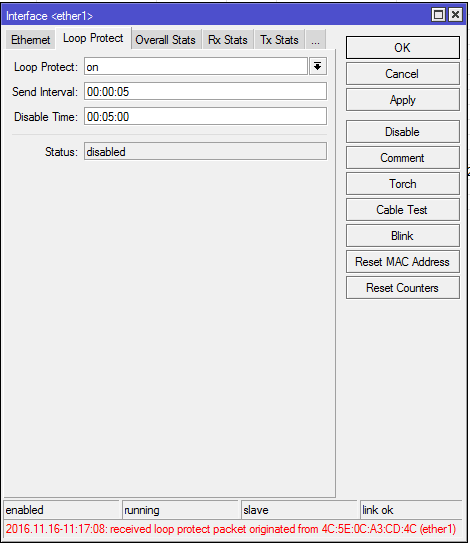

Detecting a loop with ‘Loop Protect’ enabled

In the hardware lab above, we connected a second cable between the two Cisco 3750 switches with spanning tree turned off. ‘Loop Protect’ detected the loop almost immediately, as indicated by the message in red at the bottom of the picture below. The status has now changed to disabled and will stay that way until the loop clears and the disable timer expires.

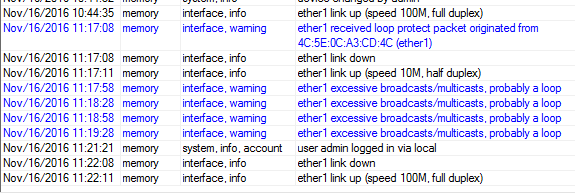

‘Loop Protect’ in the log

Like all good features, ‘Loop Protect’ adds status messages to the log. The messages below show the loop being detected and then cleared. If you send your log messages to an external syslog server, you can create alerts to let you know when a port has gone down due to a loop.

- 11:17:08 – Loop is detected

- 11:17:08 – ether1 goes into disabled state

- 11:22:11 – The loop is cleared by the disable timer expiring (after unplugging the rogue cable between switches)
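As a quick check on the timeline above, the time the port spent disabled can be worked out from the two timestamps. The Python below is a minimal sketch; the helper name `seconds_between` is hypothetical and it assumes both timestamps fall on the same day.

```python
from datetime import datetime


def seconds_between(start: str, end: str) -> int:
    """Seconds elapsed between two HH:MM:SS timestamps on the same day (hypothetical helper)."""
    fmt = "%H:%M:%S"
    delta = datetime.strptime(end, fmt) - datetime.strptime(start, fmt)
    return int(delta.total_seconds())


# Timestamps taken from the log entries listed above.
downtime = seconds_between("11:17:08", "11:22:11")
print(downtime)  # 303
```

The port was down for 303 seconds, a little over five minutes. That would be consistent with a ‘Disable Time’ of about five minutes, although the value configured in the lab is not stated in the text.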