What is whitebox networking and why is it important?

A brief history of the origins of whitebox

One of the many interesting conversations to come out of my recent trip to Network Field Day 14 (NFD14) hosted by Gestalt IT was a discussion on the future of whitebox. As someone who co-founded a firm that consults on whitebox and open networking, it was a topic that really captivated me and generated a flurry of ideas on the subject. This will be the first in a series of posts about my experiences and thoughts on NFD14.

Whitebox is a critical movement in the network industry that is reshaping the landscape of what equipment and software we use to build networks. At the dawn of the age of IT in the late 80s and early 90s, we used computing hardware and software that was proprietary, with the IBM mainframe being a great example.

Then we evolved into the world of x86 and along came a number of operating systems that we could choose from to customize the delivery of applications and services. Hardware became a commodity and software became independent of the hardware manufacturer.

ONIE – The beginning of independent network OS

The importance of whitebox in 2017 and beyond

Whitebox is critically important to the future of networking because it is forcing all incumbent network hardware/software vendors to compete in an entirely different way. The idea that a tightly integrated network operating system on proprietary hardware is essential to maintaining uptime and availability is rapidly fading.

Tech giants like Google, Facebook and LinkedIn have proven that commodity and open network hardware/software can scale and support the most mission-critical environments. This has given early adopters of whitebox networking the confidence to deploy it in the enterprise data center.

Whitebox is only a data center technology…right?


As we visited the presenters of NFD14 who had been developing whitebox software and hardware (Big Switch Networks and Barefoot Networks), one idea in particular stood out in my mind: whitebox has been so disruptive in the data center, so why wouldn’t we want it everywhere?

The use case for whitebox in the data center has grown so much in the last few years that it’s now a major part of the conversation when selecting a vendor for the new data centers I’m involved in designing. This is a huge leap forward, as most companies would have struggled to even consider whitebox technology as recently as two or three years ago.

A clear advantage of the hard work whitebox vendors have done in selling data center operators on commodity hardware and independent operating systems is that it has paved the way for whitebox to reach the branch and edge of the network.

Vendor position on whitebox as an edge technology

The idea that whitebox can be used outside of the data center seems to be prevalent among the vendors we met with during NFD14. This is a question I specifically posed to both Barefoot and Big Switch and the answer was the same – both companies have developed technology that is reshaping the network engineering landscape and they would like to see it go beyond the boundaries of the data center.

I would expect that in the next 12 to 18 months, we are going to see use cases targeted to the edge and maybe even campus distribution/core. A small leaf-spine architecture would be well suited to run most enterprise campuses and the CAPEX/OPEX benefits would be enormous. Couple that with smaller edge switches and you’ve got a really strong argument for ditching the incumbent network vendor as well as breaking out of vendor lock-in.

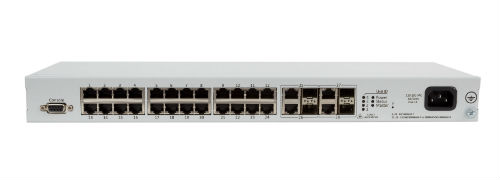

It also makes a lot of sense for whitebox companies to expand into this market, because there are already edge platforms in the world of commodity switching that support 48 ports of PoE copper with 4 x 10 Gbps uplinks. Aside from the obvious cost savings, imagine taking some of the orchestration/automation systems that are being used right now for the data center and applying them to problems like edge port provisioning or Network Access Control. Also, being able to support large wireless rollouts and controllers in a more automated fashion would be a huge win.
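As a rough illustration of what automated edge port provisioning might look like, the sketch below builds a per-port plan for a 48-port edge switch from a small table of roles. The role names, VLAN numbers, and the `provision_ports` helper are all hypothetical and are not tied to any vendor's product or API.

```python
from dataclasses import dataclass

# Hypothetical port roles; VLAN IDs and PoE settings are illustrative only.
ROLES = {
    "phone": {"vlan": 20, "poe": True},
    "ap": {"vlan": 30, "poe": True},
    "desktop": {"vlan": 10, "poe": False},
}


@dataclass(frozen=True)
class PortConfig:
    port: int
    role: str
    vlan: int
    poe: bool


def provision_ports(assignments, default_role="desktop", port_count=48):
    """Build a config for every access port from a {port: role} mapping."""
    configs = []
    for port in range(1, port_count + 1):
        # Ports without an explicit assignment fall back to the default role.
        role = assignments.get(port, default_role)
        if role not in ROLES:
            raise ValueError(f"unknown role {role!r} on port {port}")
        settings = ROLES[role]
        configs.append(PortConfig(port, role, settings["vlan"], settings["poe"]))
    return configs


if __name__ == "__main__":
    plan = provision_ports({1: "ap", 2: "phone"})
    for cfg in plan[:3]:
        print(cfg)
```

In a real deployment the resulting plan would be handed to whatever orchestration system is already in use; the point here is only that the port-level intent can live in data rather than in hand-typed configuration.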

Role in Network Function Virtualization and the virtual enterprise branch

Network Function Virtualization (NFV) has gotten a lot of press lately as more and more organizations are virtualizing routers, firewalls, WAN optimizers and now SD-WAN appliances.

One of the endgames that I see coming out of the whitebox revolution is the marrying of NFV and whitebox edge switching to create a virtual branch in a box. The value to an enterprise is enormous here as the underlying hardware can be used in a longer design cycle while the software running on top can be refreshed to solve new business requirements as needed.

The other major benefit of hardware abstraction is that it simplifies orchestration and automation, because interfaces are VLAN-based and not tied to a specific physical port or port number.
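One way to picture this: services are described by VLAN, and a separate per-platform map decides which physical interface carries each VLAN. The names below (`SERVICES`, `PORT_MAPS`, `bind_services`, and the platform and interface names) are hypothetical, a sketch of the idea rather than any NFV platform's actual data model.

```python
# Logical services keyed by VLAN ID; nothing here mentions a physical port.
SERVICES = {
    100: "wan-router",
    200: "firewall-inside",
    300: "sdwan-overlay",
}

# Hypothetical per-platform maps from VLAN ID to a physical interface name.
PORT_MAPS = {
    "box-a": {100: "eth1", 200: "eth2", 300: "eth2"},
    "box-b": {100: "swp49", 200: "swp50", 300: "swp50"},
}


def bind_services(platform):
    """Return {service: (vlan, interface)} for one hardware platform."""
    port_map = PORT_MAPS[platform]
    # Refuse to bind if the platform cannot carry every service VLAN.
    missing = sorted(set(SERVICES) - set(port_map))
    if missing:
        raise KeyError(f"{platform} has no port for VLANs {missing}")
    return {name: (vlan, port_map[vlan]) for vlan, name in SERVICES.items()}
```

Swapping the underlying hardware then means changing only the platform's entry in `PORT_MAPS`; the service definitions stay untouched.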

What are the challenges to getting whitebox into the hands of the average enterprise for edge or campus use?

While not an exhaustive list, these are a few of the challenges to getting whitebox into the average enterprise.

- Perception is one of the key challenges to moving towards whitebox at the edge. Most enterprises are hesitant to be early adopters unless there is a clear business advantage to doing so.

- Vendor lock-in is another major barrier, as most enterprises tend to stay within one vendor for routing and switching.

- Confidence is a key part of the hardware selection process, and this is an area where I feel whitebox is gathering serious momentum. That will make the edge an easier sell as it becomes a more common use case.

- Training and support are always among the first things a network team asks about, to see how easy it will be to support the new deployment and get the techies up to speed on its care and feeding. This is another area of whitebox that has seen a huge amount of growth in the last few years. Big Switch has a fantastic lab for learning data center deployments and hopefully, as the use cases expand, we’ll see the same high-quality training for those areas as well.

Closing thoughts

Whitebox at the edge is closer than ever before, and there are small pockets of actual deployments, mostly in the service provider world.

However, we’ll likely have to wait until the use cases are specifically developed and marketed towards the enterprise before there is significant momentum in adoption. As a designer of whitebox solutions, it’s something that I’ll continue to push for and evaluate, as it has the potential to make an enormous impact in enterprise networking.

That said, the future of whitebox is incredibly bright and 2017 should bring a significant amount of growth and more movement towards commodity switching as a mainstream technology in all areas of networking.